It was somewhere in the closing miles of the first leg of our own journey – a skein of uncertain steps shuffling across the David Cameron overseas trip data – that I red-flagged my hardy companions – that’s you – about a bump in the road, a set of roadblocks blockading what, under other data-design circumstances, could have been a direct route toward calculating the Prime Minister’s trip durations. To wit: had the designer been so minded, a Start and Finish Date field would have been made to come along for the ride on the sheet at the outset, these posting embarkation and return dates for each of the PM’s 116 trips. Subtract Start from Finish (plus one; see last week’s post), then, and trip duration is right there for all to see.

But the data don’t break that way, not with 91 of the trip dates read into the manifest in label terms, e.g.

3-5 July 2011

Question, then: can we get there from here? Can we wrest three days from that miserly text construction above, and its 90 fellow travellers?

Good question, though transparency impels me to confess I ‘m the one who asked it. For one thing, and as previewed by the previous post, it seems to me that the Finish Dates pose the far easier ask – because the text on the other side of the date-offsetting dash own the month and year information, and the specifying properties attaching to both.

That incentive took me to cell E5, the first cell in the Finish Date field we had mapped in the last post. And there I wrote:

=IF(ISNUMBER(A5),A5,(DATEVALUE(RIGHT(A5,LEN(A5)-FIND(“-“,A5)))))

Ok – so what’s this one all about? I goes something like this: if the entry in A5 (in the Date of Trip field) is a number – and again (previous post, again), all the Cameron trips comprising exactly one day assume numeric form, then simply return that number/date in A5. If, on the other hand, A5 holds one of those pertinacious labels with a forged date passport, we need to isolate all the text to the right of its dash, which, if nothing else, looks like a date; then, appearances notwithstanding, we’d brace the isolate with a DATEVALUE, which in fact turns a label imposter into a real live, measurable date.

This formula works in E5; and because it does, I entitled myself to copy it to all other the Finish Date cells, wherein they work, too – until E57, where David Cameron’s trip to Strasbourg hits the runway and comes to rest right by the #VALUE! message – and he was expecting “Bienvenue, Monsieur Prime Minister”.

What happened in E57, and about a dozen other Finish Date cells that resound with the same error message? My standard suspicion – that these faltering cells had been saddled with superfluous spaces in ruinous places that were jamming the formulas – proved wrong.

But what proved right was a small – a very small – difference that typified, and beset, the errant formulas. It’s in the dash, of all things; if you look closer, you’ll note that the problem cells inscribe a dash just a touch lengthier than the one nestled in the formulas that do work. My nested FIND up there asks for this

=FIND(“-“,A5)

And the delinquent cells don’t have that; they have this:

–

And that isn’t the same dash – trust me.

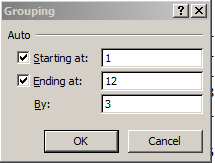

And because it isn’t you can select the Date of Trip range and drive this Find and Replace through it:

(You can actually select the respective dashes in any cells in which they featured, and copy-and-paste them into the appropriate Find and Replace fields.)

And that works, too. Every Finish Date cell now boasts a finish date.

But then there is the matter of the Start Date(s). Extracting whatever is there from the left of every dash in the Date of Trip entries and turning these out in numeric terms is conceivable, e.g. for A5:

=VALUE(LEFT(A5,FIND(“-“,A5)-1))

That is, find the dash (after the makeover endorsed above), grasp all the characters to its left, and reintroduce them to their numeric provenance via VALUE. For A5 we’d get 20. Moving into the Trip Duration field in F, you could press ahead with

=DAY(E5)-D5+1

That is, use DAY to skim the day value from the full-fledged data in Finish Date (and that’s 21 in E5), subtract 20 from it, and add 1 to account for both the 20th and 21st in the duration (again, 21-20=1).

Now rewrite the expression in D5 to read:

=IF(ISNUMBER(A5),A5,VALUE(LEFT(A5,FIND(“-“,A5)-1)))

Again, that IF statement leaves the actual, native dates in the A column as they are; they require no retooling. And remember that the real dates always return 1 as their trip duration (see above), requiring in turn this amendment to the expressions in the Trip Duration field:

=IF(ISNUMBER(A5),1,DAY(E5)-D5+1)

If you’re still with me, let’s understand that this all works – most of the time. But it won’t work for the Date of Trip plunked down in A19 – 30 November- 2 December 2010. In fact, on the one hand, none of the formulaic activity on that row concedes an error message. On the other, what we get for Trip Duration in F19 is -42335 – because the Start Date formula in D19 treats 30 November as an authentic date – from 2015, because that’s precisely what happens when you actually enter 30 November in a cell. Absent a concerted year reference, Excel defaults the year to the one during which you’ve entered it. And the same thing happens in A88-A90. (What’s remarkable here is that the VALUE –not DATEVALUE – function is prepared to turn 30 November into a number – and it does).

Question, then: given the determined inconsistency of the data, is it possible to write a formula that could contend with them all, and succeed in realizing Trip Duration in every case? I’m not exactly sure; but if it is, you’d have to do battle with a rather variegated – and long – set of nested IF contingencies before you could walk away from it with head held high. What, for example, are we do with

31 December 2013-2 January 2014 ?

And if you want to reply that in fact none of the Prime Minister’s trips overarched two years, is that quite the point? You want a formula that handles all comers – actual or potential. Can it be written?

Over to you, learned colleague.